news

2020 and Earlier

July 2020: I (virtually) attended my first conference, ICONS, and presented a poster on spiking neural networks.

2024

Robot-assisted Inside-mouth Bite Transfer using Robust Mouth Perception and Physical Interaction-Aware Control

Rajat Jenamani, Daniel Stabile, Ziang Liu, Abrar Anwar, Katherine Dimitropoulou, Tapomayukh Bhattacharjee

We design a system that feeds people with disabilities by placing food inside their mouths, using real-time mouth perception and tactile-informed control.

HRI (Best Paper Nominee), 2024

2023

Exploring Strategies for Efficient VLN Evaluation

Abrar Anwar*, Rohan Gupta*, Elle Szabo, Jesse Thomason

Evaluation in the real world is often time-consuming and expensive, so we propose a targeted contrast set-based evaluation strategy to efficiently evaluate the linguistic and visual capabilities of an end-to-end VLN policy.

Workshop on Language and Robot Learning (LangRob) @ CoRL (Oral Presentation), 2023

paper


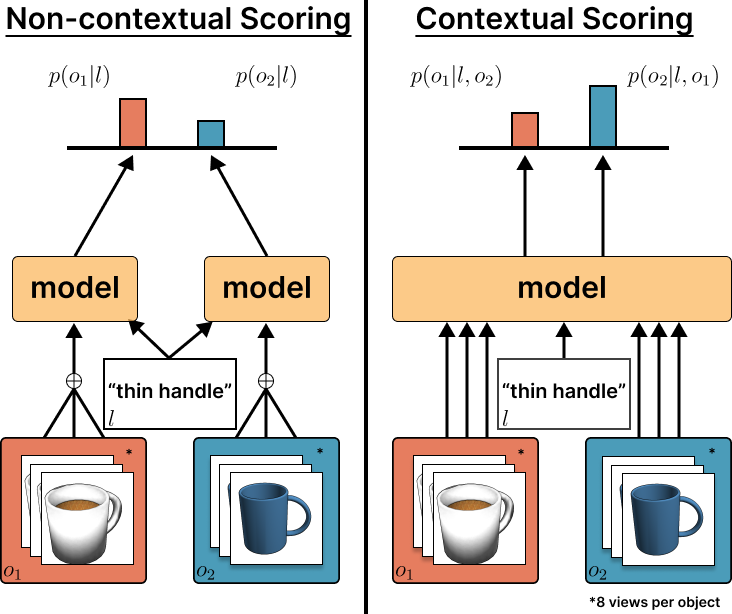

Comparative Reasoning for Multi-View Language Grounding

Abrar Anwar, Chancharik Mitra*, Rodolfo Corona*, Dan Klein, Trevor Darrell, Jesse Thomason

We present MAGiC, a model that selects an object referent from language meant to distinguish between two similar objects by reasoning over both objects from multiple vantage points.

In review, 2023

2022

Human-Robot Commensality: Bite Timing Prediction for Robot-Assisted Feeding in Groups

Jan Ondras*, Abrar Anwar*, Tong Wu*, Fanjun Bu, Malte Jung, Jorge Jose Ortiz, Tapomayukh Bhattacharjee

We develop data-driven models to predict when a robot should feed during social dining scenarios.

We build a dataset of human-human commensality, develop novel models to learn social dynamics of when to feed, and conduct a human-robot commensality study.

CoRL, 2022

paper

arxiv

2021

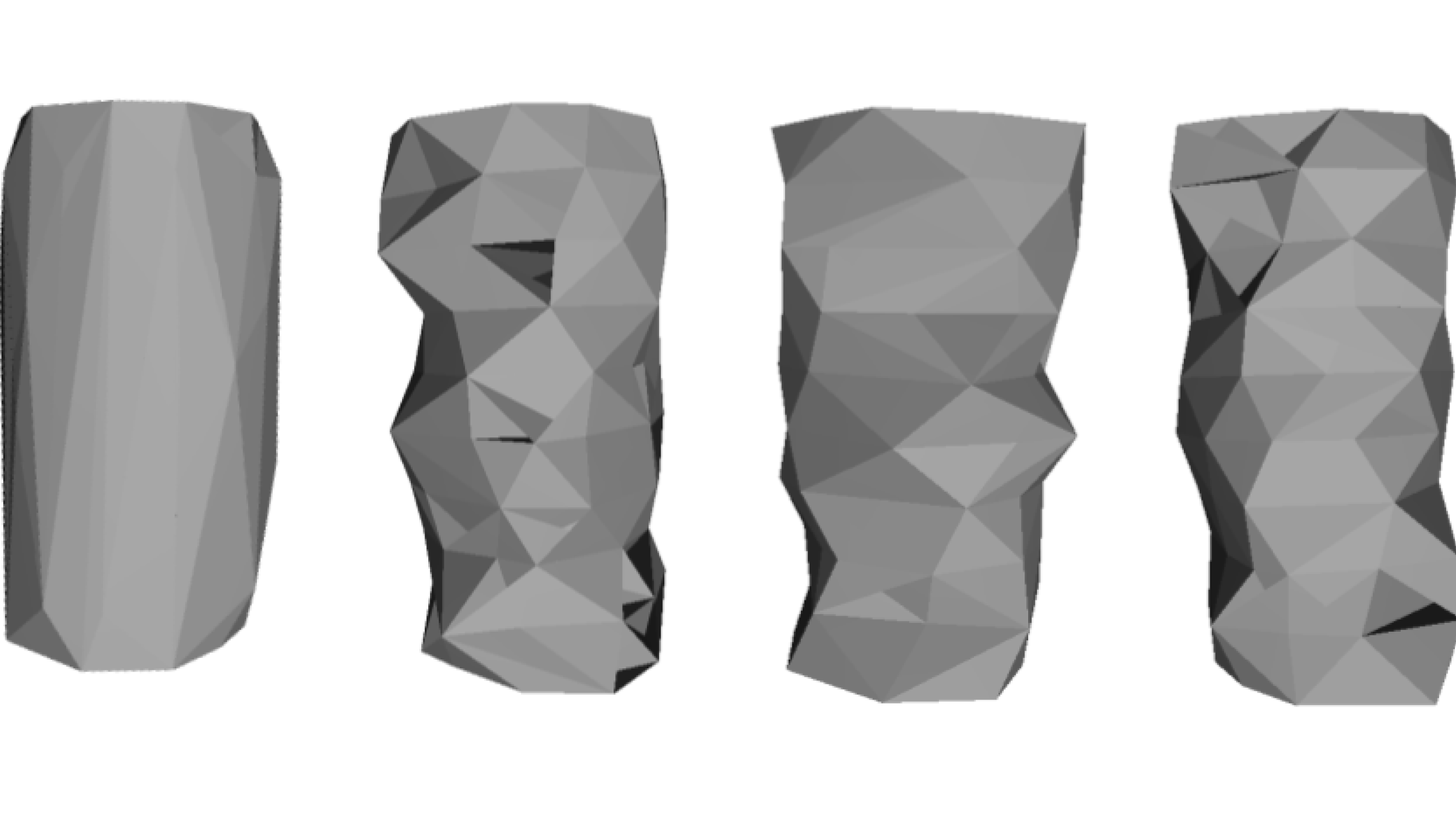

Deep Reinforcement Learning for Optimal Refinement of Cross-Sectional Mesh Sequence Finite Elements

Abrar Anwar

Developed the first deep reinforcement learning framework for mesh refinement and refined “good” quality surface reconstructions of cross-sectional contours using soft actor-critic.

Honors Thesis, 2021


Watch Where You're Going! Gaze and Head Orientation as Predictors for Social Robot Navigation

Blake Holman, Abrar Anwar, Akash Singh, Mauricio Tec, Justin Hart, Peter Stone

We use virtual reality to collect gaze and position data, then build a predictive model and a mixed-effects model showing that gaze orientation precedes other features.

ICRA, 2021

paper

video

2020

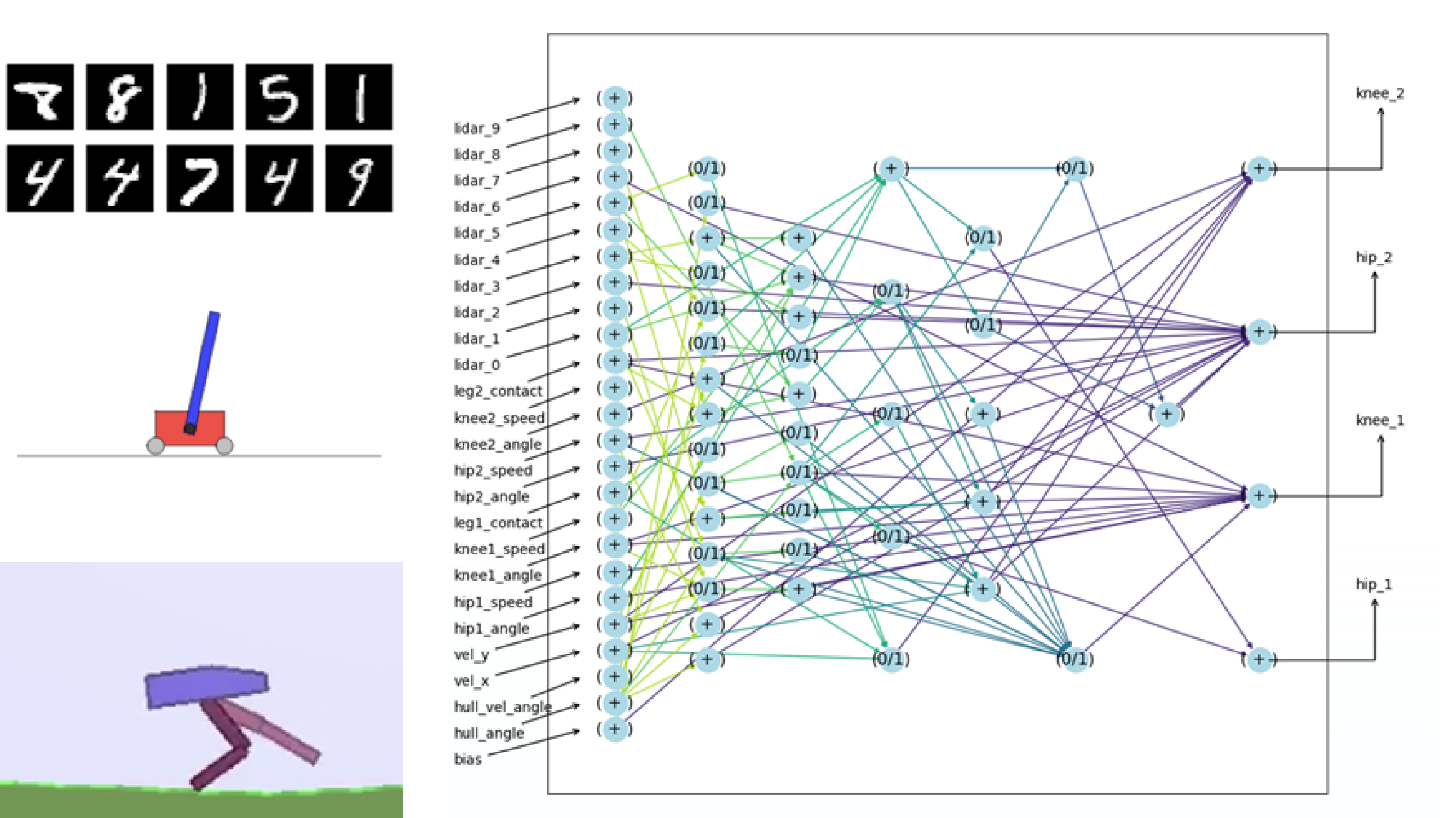

Evolving Spiking Circuit Motifs using Weight Agnostic Neural Networks

Abrar Anwar, Craig Vineyard, William Severa, Srideep Musuvathy, Suma Cardwell

We use an evolutionary, weight-agnostic method to generate spiking neural networks for classification, control, and various other tasks.

AAAI-21 Undergraduate Consortium, 2021

Computer Science Research Institute Summer Proceedings, 2020

International Conference on Neuromorphic Systems (poster), 2020

paper

tech report

poster

2019

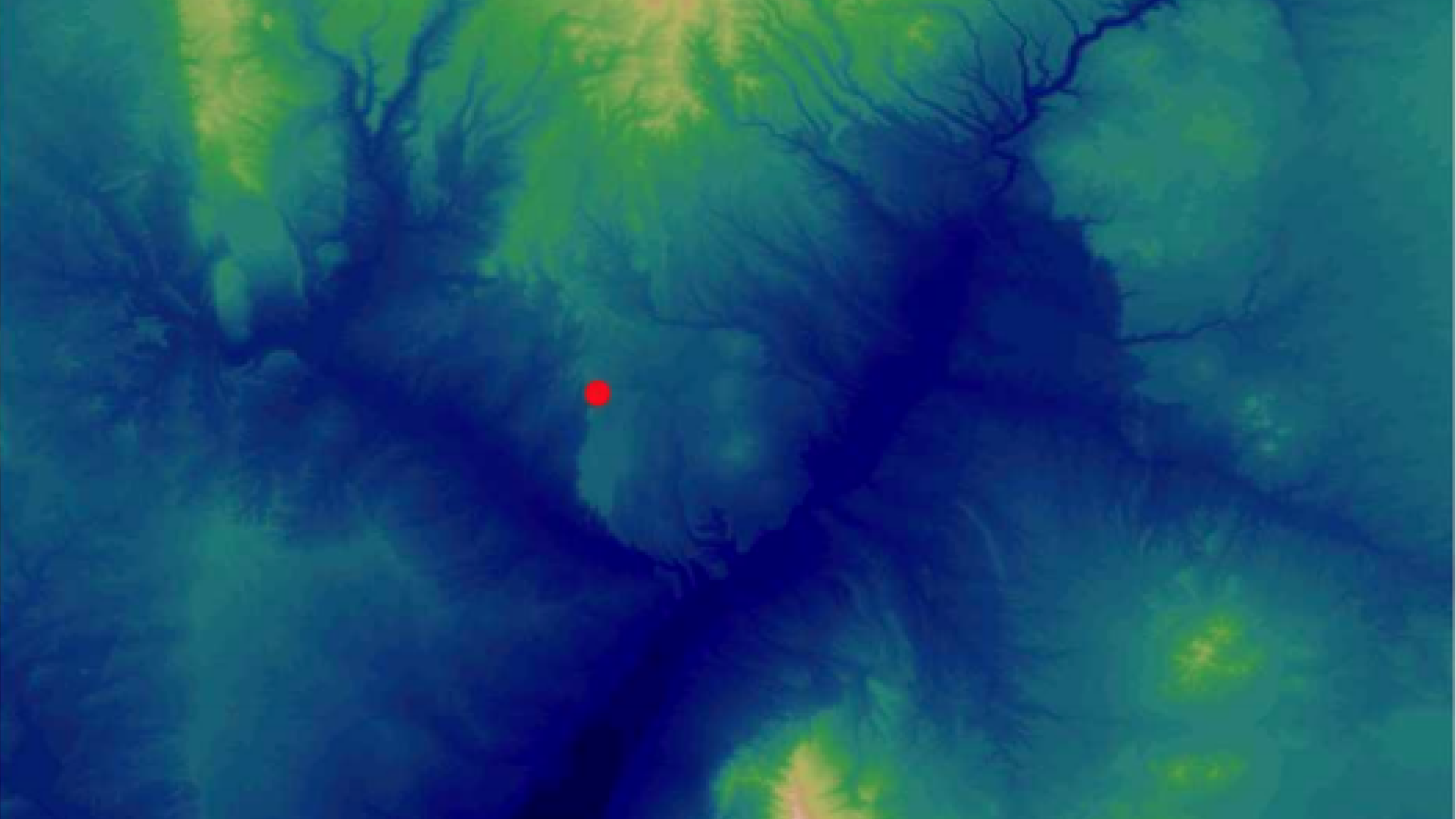

BrainSLAM: Robust autonomous navigation in sensor-deprived contexts

Felix Wang, James B. Aimone, Abrar Anwar, Srideep Musuvathy

We explore using brain-inspired approaches to navigation and localization in a noisy, data-sparse environment for a hypersonic glide vehicle. Rotation-invariant feature representations are used to increase accuracy and reduce map storage.

Sandia National Labs Technical Report, 2019

tech report
